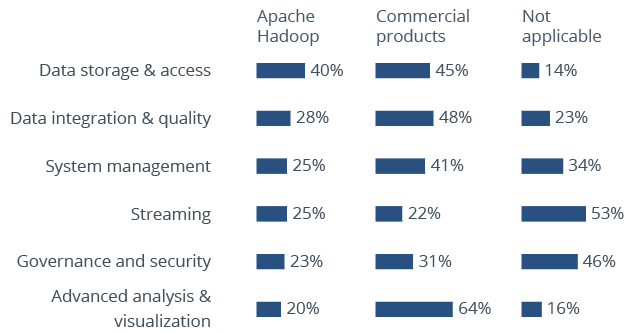

Tools for implementing Hadoop by software category (n=141)

Commercial tools and Hadoop distributions clearly dominate three categories: data integration and data quality (48 percent), system management (41 percent) and, especially, advanced analytics and visualization (64 percent). Data storage is the only category in which use of the open source Apache Hadoop framework is very high compared with commercial tools or Hadoop distributions. Storing data in the Hadoop Distributed File System (HDFS) is one of Hadoop's original core functions and is therefore better known.

About half of the participants use no tools for streaming (53 percent) or for governance and security (46 percent). There appear to be no clearly defined products for these categories yet.
